Artificial intelligence (AI) is revolutionizing the way we live and work, and its impact on the business world is immense. AI is integral to modern business operations, from automating mundane tasks and providing invaluable insights to protecting your business’s property. However, with great power comes great responsibility, and businesses are increasingly concerned about the potential biases within AI security systems.

In 2021, Pro-Vigil polled business owners who used AI security camera systems. We found that our audience was either unaware of potential bias in AI or unworried about these biases cropping up in their video surveillance tools. As long as their AI security system worked to deter crime, bias wasn’t an issue.

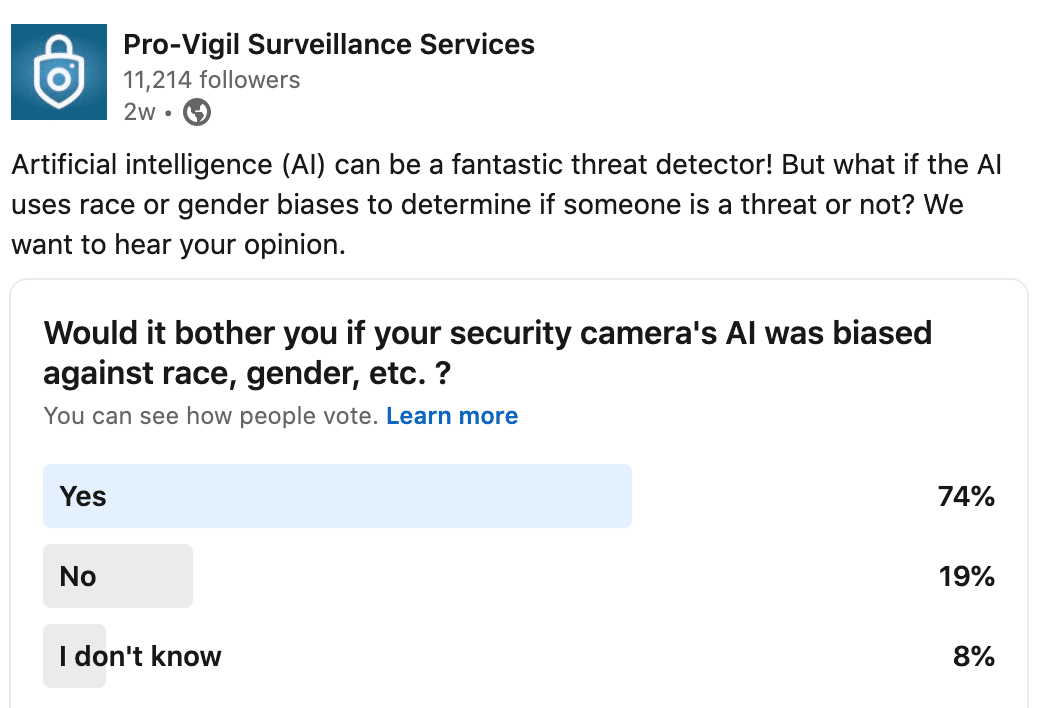

But a new poll in 2023 showed that attitudes toward biases in AI video surveillance systems are changing. What is bias in AI, and why should you care?

What is Bias in AI Security?

The software within your AI security camera can be biased in several ways:

- Data Bias: The AI algorithm used in the camera may be trained on a biased dataset, resulting in unfair or inaccurate results. For example, if the dataset used to train the camera contains primarily images of people with light skin, the camera may be more accurate at detecting light-skinned individuals than darker-skinned individuals.

- Algorithm Bias: The AI algorithm used in the camera may be biased because of how it is designed or programmed. If the algorithm is programmed to treat specific behaviors or actions as suspicious, it may unfairly target certain groups or incorrectly identify individuals as performing actions that they aren’t.

- Environmental Bias: The AI security camera may be biased because of environmental factors. If the camera is installed in an area with poor lighting or obscured views, it may have difficulty accurately detecting individuals, which could skew its results.

- Interpretation Bias: The AI security camera's results may be interpreted in a biased way. If the personnel who use the AI security system hold certain assumptions, those assumptions may color how they read the camera's results.
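One way to see whether the data bias described above is present is to compare how often a camera detects people from different groups in footage where a person is known to be there. The sketch below is only an illustration under stated assumptions: the function name `detection_rate_by_group`, the group labels, and the sample records are all hypothetical and do not come from any Pro-Vigil product or real dataset.

```python
from collections import defaultdict

def detection_rate_by_group(records):
    """Return the share of known-present people the camera detected, per group.

    records: iterable of (group, detected) pairs, one per labeled frame in
    which a person was actually present. Both fields are hypothetical.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for group, detected in records:
        totals[group] += 1
        if detected:
            hits[group] += 1
    return {group: hits[group] / totals[group] for group in totals}

# Made-up labeled test footage, for illustration only.
sample = [
    ("lighter_skin", True), ("lighter_skin", True),
    ("lighter_skin", True), ("lighter_skin", False),
    ("darker_skin", True), ("darker_skin", False),
    ("darker_skin", False), ("darker_skin", True),
]

rates = detection_rate_by_group(sample)
# Difference between the best-served and worst-served group.
gap = max(rates.values()) - min(rates.values())
print(rates, gap)
```

A large gap between groups on a balanced test set suggests the system was trained on unrepresentative data. What counts as "large" is a judgment call for the business and its vendor.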

AI is nothing more than computer algorithms programmed by humans. As a result, human biases can also be programmed into your AI security camera. If not accounted for, both conscious and inadvertent biases can have serious consequences for your business.

Why Bias in AI Security Systems Is a Concern for Business Owners

Business owners are increasingly concerned about bias in AI security systems for several reasons. One of the main reasons is the potential financial impact: a biased AI security system that makes incorrect decisions can cause financial losses for the business. If a biased AI system wrongly identifies a legitimate customer as a criminal, the business may lose future revenue not just from that customer, but also from other customers who see the incident as a form of discrimination.

Business owners are also rightly concerned about reputational damage. Using a biased AI security system can damage the public’s perception of your company, which can lead to negative publicity and ultimately harm your bottom line.

Finally, there is growing concern about the legal implications of biased AI security systems. If a business uses an AI security system that discriminates against certain groups, it could face legal action and potential fines or sanctions.

Should You Worry About AI Security Camera Bias?

Evidence shows that business owners are becoming more aware of the potential for bias in AI platforms.

In Pro-Vigil’s recent poll of AI security system users, 74% say they are bothered by the potential for bias.

But how can these businesses protect themselves from discrimination programmed into the video security system they use every day? Here are some options:

- Choose the Right Technology: When selecting an AI security system, make sure it is designed to avoid bias.

- Test and Validate: Test and validate the AI security system to confirm that it does not exhibit bias.

- Regularly Audit: Conduct regular reviews of the AI security system to detect and correct any potential biases.

- Monitor Results: Continuously monitor the AI security system's results to identify any patterns of bias.

- Train and Educate: Help your employees understand the potential for bias in AI security systems and provide guidance on how to avoid it. This training may help you avoid unconscious bias in decision-making.

- Work with Experts: Consider working with experts in AI security systems to make sure your platform fits your company.
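The testing, auditing, and monitoring steps above can be sketched as a simple check on the alerts a system raises. The example below is a minimal illustration, not a description of how any particular product works: it assumes you keep a log of alerts tagged with a segment (here, lighting conditions) and whether a reviewer confirmed each alert as a real incident. The function names, the segment labels, the 10-point tolerance, and the log entries are all hypothetical.

```python
from collections import defaultdict

def false_alarm_rates(alerts):
    """Return the share of raised alerts that were NOT confirmed, per segment.

    alerts: iterable of (segment, confirmed) pairs, one per alert raised.
    """
    raised = defaultdict(int)
    false_alarms = defaultdict(int)
    for segment, confirmed in alerts:
        raised[segment] += 1
        if not confirmed:
            false_alarms[segment] += 1
    return {seg: false_alarms[seg] / raised[seg] for seg in raised}

def flag_outliers(rates, tolerance=0.10):
    """List segments whose false-alarm rate exceeds the lowest rate by more
    than `tolerance` (an assumed threshold; choose one that fits your site)."""
    if not rates:
        return []
    baseline = min(rates.values())
    return sorted(seg for seg, rate in rates.items() if rate - baseline > tolerance)

# Made-up alert log, for illustration only.
log = [
    ("daylight", True), ("daylight", True),
    ("daylight", True), ("daylight", False),
    ("low_light", True), ("low_light", False),
    ("low_light", False), ("low_light", False),
]

alarm_rates = false_alarm_rates(log)
flagged = flag_outliers(alarm_rates)
print(alarm_rates, flagged)
```

Running a check like this on a schedule turns "monitor results" into a routine report. Any segment that keeps getting flagged is a candidate for a closer audit.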

Pro-Vigil offers our customers the best in video surveillance technology. Talk with our team today about an AI security camera system appropriate for your business goals.

Q&A

Artificial intelligence (AI) is transforming the field of video surveillance. Today’s cameras offer real-time monitoring, predictive analytics, and automated camera responses. These AI features help improve public safety and security. However, there are also concerns about privacy and the misuse of these tools. Striking a balance between security and individual rights will be key to using AI software in the future.

An AI security camera can be biased if its underlying algorithms and data sets are not diverse and representative enough. If the camera is only trained on specific demographics, it may not accurately identify individuals outside those categories. Likewise, underlying data that is skewed toward certain behaviors can lead to bias.